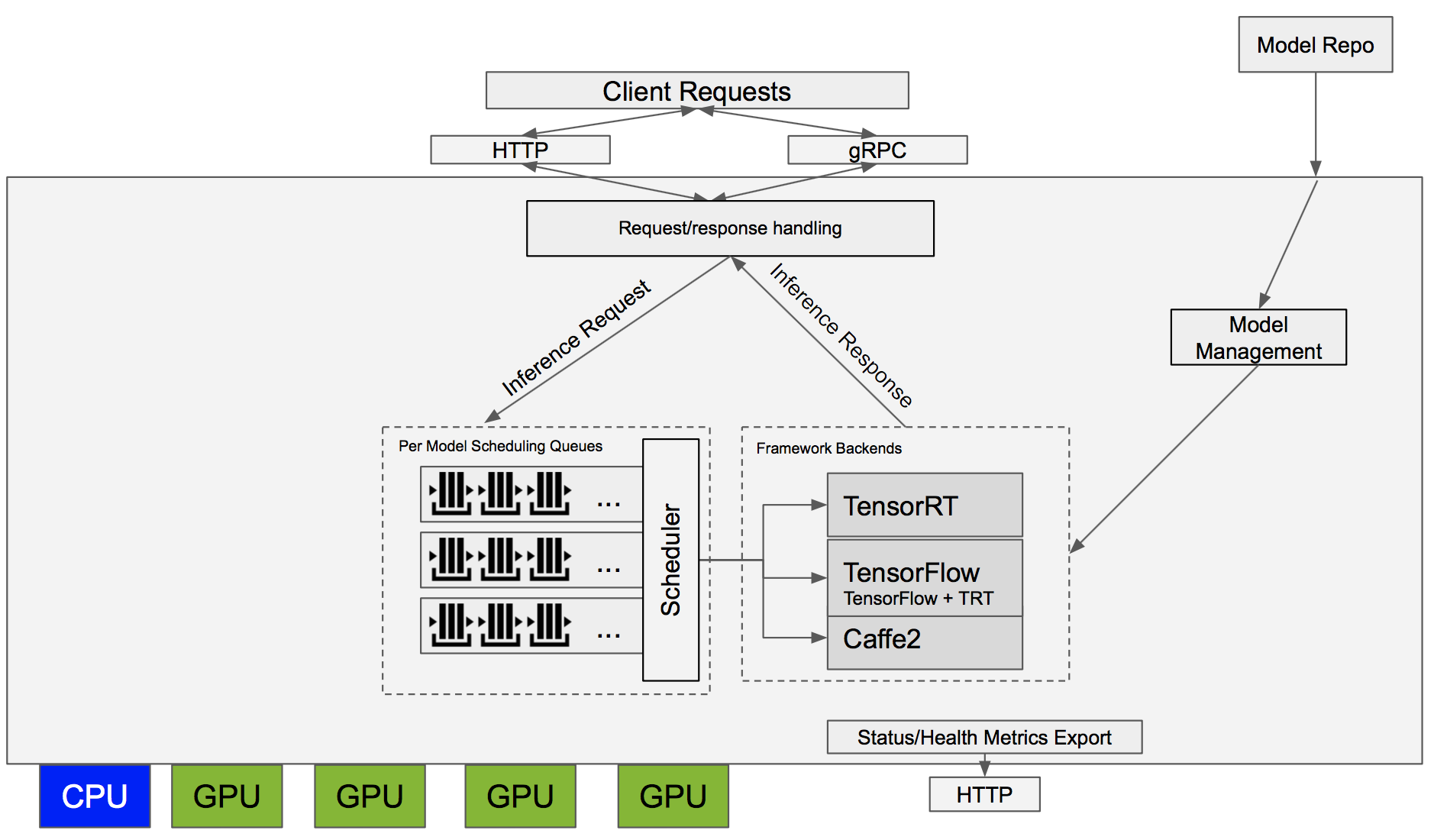

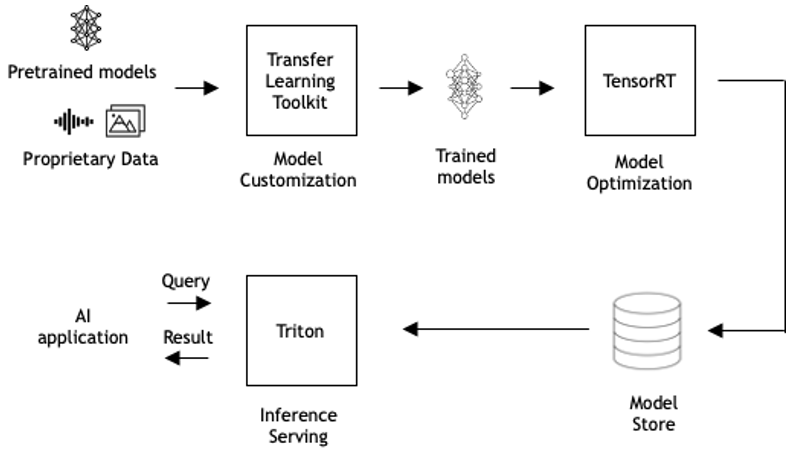

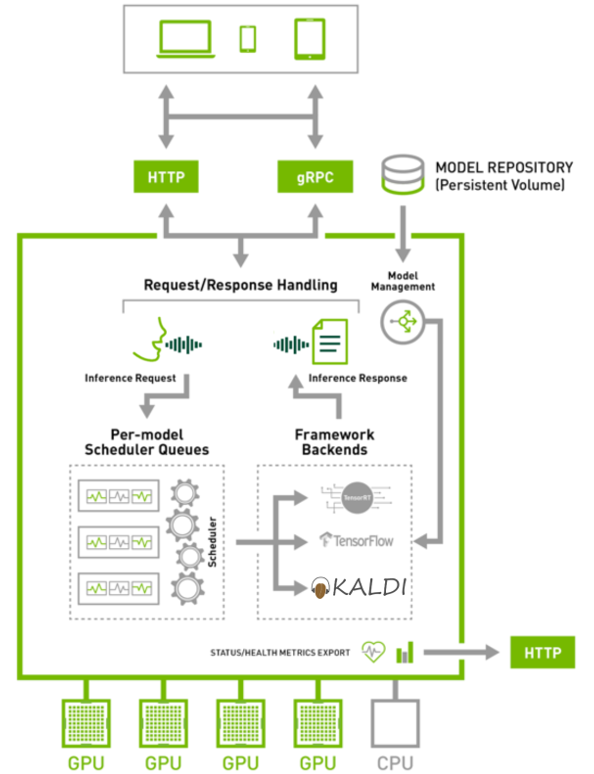

Serving and Managing ML models with Mlflow and Nvidia Triton Inference Server | by Ashwin Mudhol | Medium

Deploy fast and scalable AI with NVIDIA Triton Inference Server in Amazon SageMaker | AWS Machine Learning Blog

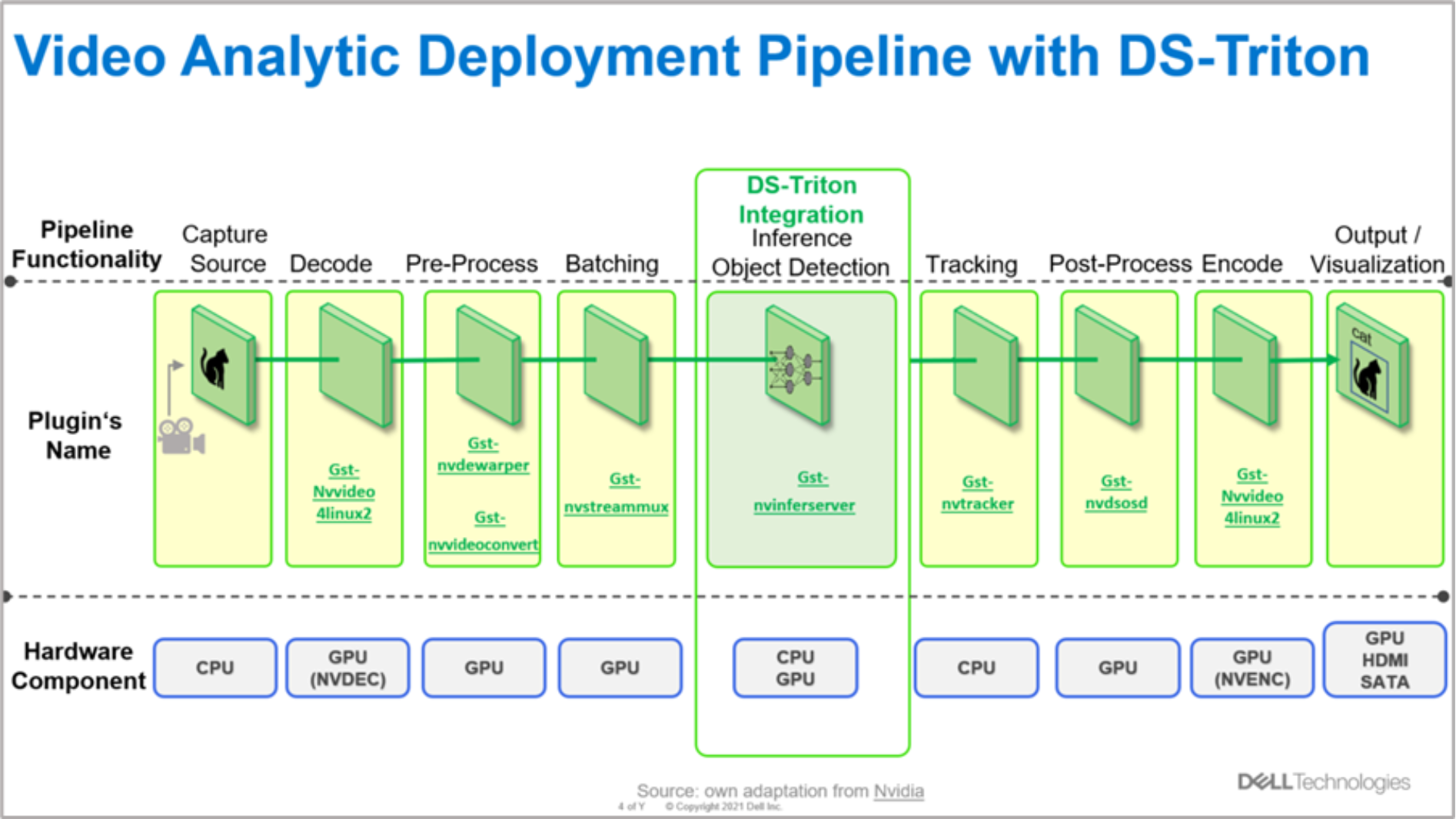

NVIDIA DeepStream and Triton integration | Developing and Deploying Vision AI with Dell and NVIDIA Metropolis | Dell Technologies Info Hub

Accelerated Inference for Large Transformer Models Using NVIDIA Triton Inference Server | NVIDIA Technical Blog

Serving Inference for LLMs: A Case Study with NVIDIA Triton Inference Server and Eleuther AI — CoreWeave

Accelerated Inference for Large Transformer Models Using NVIDIA Triton Inference Server | NVIDIA Technical Blog